Recently I had the privilege of joining the organizing committee of the International Workshop on OpenCL (IWOCL) 2019 as a volunteer. The workshop ran for three days, and I was especially excited to attend DHPCC++ (Distributed & Heterogeneous Programming in C/C++), a conference held in conjunction with IWOCL.

IWOCL: The Push Towards Heterogeneous Programming

The end of Moore’s law, a mantra in the industry nowadays, has fostered the growth of parallel computing. The increased attention on machine learning has also driven research in parallel computing. As a result, we are now seeing massively parallel devices (such as GPUs) added to systems to increase computational power.

With multiple device vendors in the market, programmers are wary of locking themselves in to a single vendor, partly because of the bargaining power this gives the vendor. For example, in the High Performance Computing industry, if a molecular simulation is written in CUDA, a proprietary platform owned by NVIDIA, its users will be forced to purchase NVIDIA hardware for the foreseeable future. The widespread adoption of CUDA has therefore raised concerns, and many developers are now moving towards open standards such as OpenCL and SYCL (pronounced "sickle").

Sitting in the audience, I heard experts from Intel explain that the complexity of OpenCL has created a steep learning curve and impeded widespread adoption. SYCL is intended to provide a higher-level abstraction layer over OpenCL. The major version upgrade from OpenCL 1.2 to 2.0 has also caused dissatisfaction in the industry because of the investment it requires: porting code to a significantly different version means re-investing a considerable number of engineering hours.

There are also multiple open source libraries emerging, such as RAJA (https://github.com/LLNL/RAJA) and Kokkos (https://github.com/kokkos/kokkos). Nevertheless, researchers and the industry remain optimistic about OpenCL.

Heterogeneous and Distributed Computing in C++

People were also very excited about C++20 (the upcoming major ISO release following C++17). Executors, however, are more likely to appear in C++23 (this is still under discussion, so I cannot confirm whether it will be final). Even so, C++20 is expected to include features that help heterogeneous and distributed computing. Lambda functions let higher-level application software be coded cleanly, allowing the same code to be executed on multiple kinds of hardware. A few engineers also discussed creating a safer C++, reflecting the push towards autonomous (driverless) vehicles.
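To make the point about lambdas concrete, here is a minimal sketch in standard C++ of one lambda "kernel" being handed to two different execution backends. The backends here (`run_sequential`, `run_threaded`) and the `saxpy` helper are hypothetical names invented for this illustration; they stand in for real device backends and are not the SYCL or executors API.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical backend 1: run the kernel for every index on the calling thread.
template <typename Kernel>
void run_sequential(std::size_t n, Kernel kernel) {
    for (std::size_t i = 0; i < n; ++i) kernel(i);
}

// Hypothetical backend 2: split the index range into chunks, one per worker thread.
template <typename Kernel>
void run_threaded(std::size_t n, Kernel kernel, unsigned workers = 4) {
    if (workers == 0) workers = 1;
    const std::size_t chunk = (n + workers - 1) / workers;
    std::vector<std::thread> pool;
    for (unsigned w = 0; w < workers; ++w) {
        const std::size_t begin = w * chunk;
        const std::size_t end = std::min(n, begin + chunk);
        if (begin >= end) break;  // no work left for this worker
        pool.emplace_back([=] {
            for (std::size_t i = begin; i < end; ++i) kernel(i);
        });
    }
    for (auto& t : pool) t.join();
}

// Computes a * x + y element-wise; the same lambda is used for both backends.
std::vector<float> saxpy(float a, const std::vector<float>& x,
                         std::vector<float> y, bool threaded) {
    assert(x.size() == y.size());
    const float* in = x.data();
    float* out = y.data();
    // The "kernel": written once, independent of where it runs.
    auto kernel = [=](std::size_t i) { out[i] = a * in[i] + out[i]; };
    if (threaded) {
        run_threaded(y.size(), kernel);
    } else {
        run_sequential(y.size(), kernel);
    }
    return y;
}
```

Calling `saxpy(2.0f, x, y, false)` and `saxpy(2.0f, x, y, true)` should give the same result, since each index is written by exactly one worker. In SYCL-style code the idea is similar, with the backend choice replaced by a device queue.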

Let’s Teach Our Computers to Program

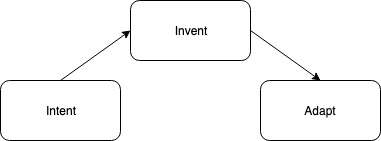

Tim Mattson, from Intel Research, delivered the final keynote, emphasizing his dream of a computer that could translate a human being's intention into a program. The process consists of the following three aspects.

- Intent – The human intention of the requirement

- Invent – The algorithm which will be designed by the machine

- Adapt – Optimizations that will be performed by the machine

Are we there yet? I guess we are decades away (in the worst case, maybe centuries). But people are already looking at revolutionizing the way we develop data structures. For example, Tim Kraska from MIT, Jeff Dean from Google and their co-authors published a paper on learned indices (https://arxiv.org/abs/1712.01208), in which they look at replacing a B+ tree (a traditional index data structure) with a neural network or a regression model. Their intuition is that an index already acts as a model: given a key, it predicts the position of the matching record in sorted data.
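The "index as a model" intuition can be sketched in a few lines of C++. This is a toy illustration only, not the method from the paper: it assumes a single least-squares line fitted over a sorted array of keys, and the `LearnedIndex` type and its members are hypothetical names. The model predicts a position, and a binary search within the largest prediction error seen during fitting corrects the guess.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Hypothetical toy learned index: a linear model maps key -> position.
struct LearnedIndex {
    std::vector<std::int64_t> keys;  // must be sorted ascending
    double slope = 0.0;
    double intercept = 0.0;
    std::size_t max_error = 0;       // worst |predicted - actual| over all keys

    explicit LearnedIndex(std::vector<std::int64_t> sorted_keys)
        : keys(std::move(sorted_keys)) {
        assert(std::is_sorted(keys.begin(), keys.end()));
        const std::size_t n = keys.size();
        if (n == 0) return;
        // Least-squares fit of position (y) against key (x).
        double mean_x = 0.0, mean_y = 0.0;
        for (std::size_t i = 0; i < n; ++i) {
            mean_x += static_cast<double>(keys[i]);
            mean_y += static_cast<double>(i);
        }
        mean_x /= static_cast<double>(n);
        mean_y /= static_cast<double>(n);
        double cov = 0.0, var = 0.0;
        for (std::size_t i = 0; i < n; ++i) {
            const double dx = static_cast<double>(keys[i]) - mean_x;
            cov += dx * (static_cast<double>(i) - mean_y);
            var += dx * dx;
        }
        if (var > 0.0) slope = cov / var;
        intercept = mean_y - slope * mean_x;
        // Record the worst-case error so lookups know how far to search.
        for (std::size_t i = 0; i < n; ++i) {
            const std::size_t p = predict(keys[i]);
            max_error = std::max(max_error, p > i ? p - i : i - p);
        }
    }

    // Model prediction, clamped to a valid position.
    std::size_t predict(std::int64_t key) const {
        const double raw = slope * static_cast<double>(key) + intercept;
        const double last = static_cast<double>(keys.size() - 1);
        return static_cast<std::size_t>(std::llround(std::min(std::max(raw, 0.0), last)));
    }

    // Returns the position of key, or -1 if it is not present.
    std::ptrdiff_t find(std::int64_t key) const {
        if (keys.empty()) return -1;
        const std::size_t guess = predict(key);
        const std::size_t lo = guess > max_error ? guess - max_error : 0;
        const std::size_t hi = std::min(keys.size(), guess + max_error + 1);
        const auto first = keys.begin() + static_cast<std::ptrdiff_t>(lo);
        const auto last = keys.begin() + static_cast<std::ptrdiff_t>(hi);
        const auto it = std::lower_bound(first, last, key);
        return (it != last && *it == key) ? it - keys.begin() : -1;
    }
};
```

When the keys are close to evenly spaced, `max_error` stays small and a lookup only touches a handful of entries. Skewed key distributions widen the search window, which is where a richer model would be needed.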

Even though there is a long way for us to go, this is an exciting time to be in the field, as the foundations of these great innovations will be laid in the coming years, defining the future in many ways.